The AWS Certified Developer – Associate Sample Exam


Question 1 of 10

1. Question

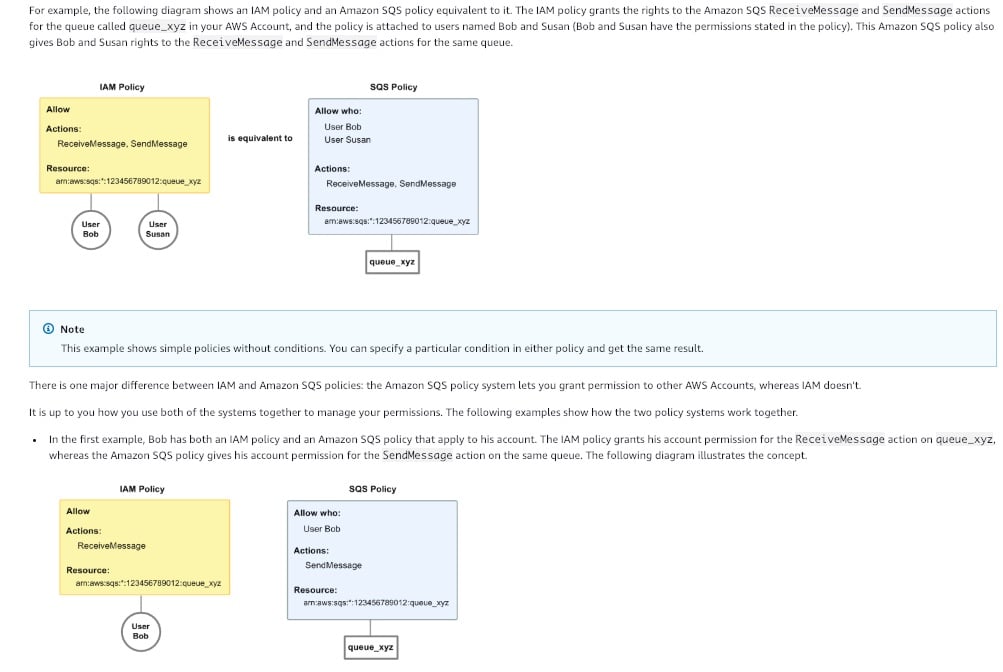

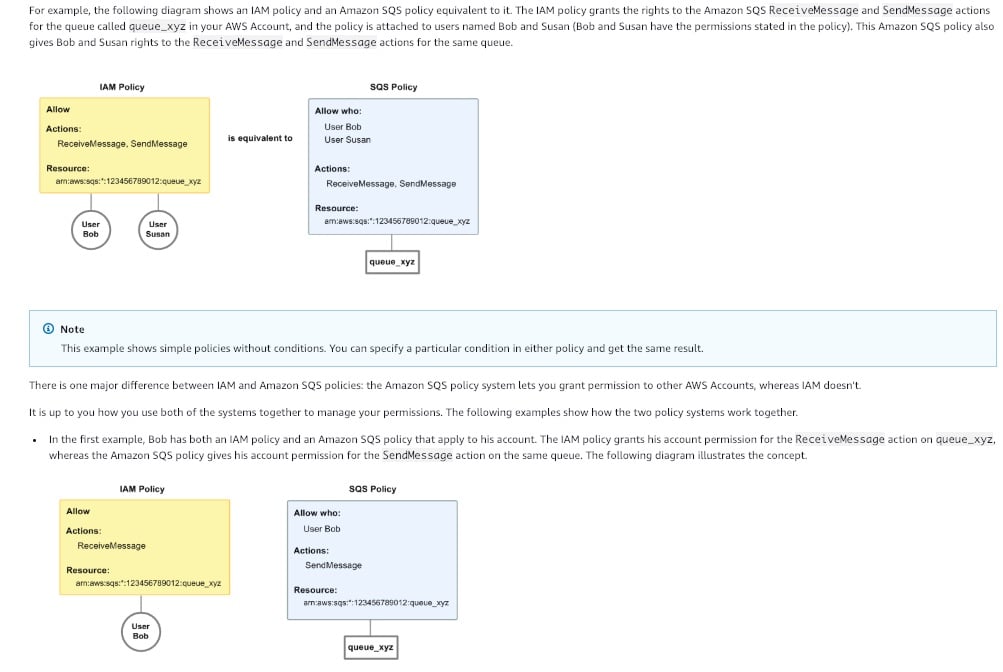

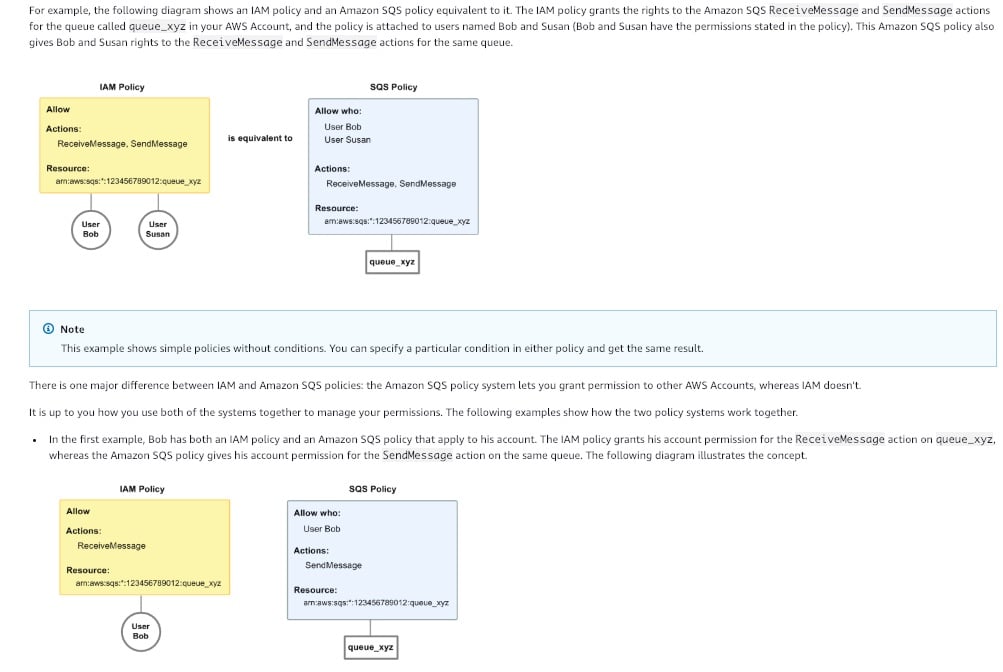

A user has an IAM policy as well as an Amazon SQS policy that apply to his account. The IAM policy grants his account permission for the ReceiveMessage action on example_queue, whereas the Amazon SQS policy gives his account permission for the SendMessage action on the same queue.

Considering the permissions above, which of the following options are correct? (Select two)

The user can send a ReceiveMessage request to example_queue, the IAM policy allows this action

The user has both an IAM policy and an Amazon SQS policy that apply to his account. The IAM policy explicitly allows the ReceiveMessage action on example_queue, and neither policy denies it, so the request succeeds. The Amazon SQS policy separately allows the SendMessage action on the same queue.

How IAM policy and SQS policy work in tandem:

via – https://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-using-identity-based-policies.html

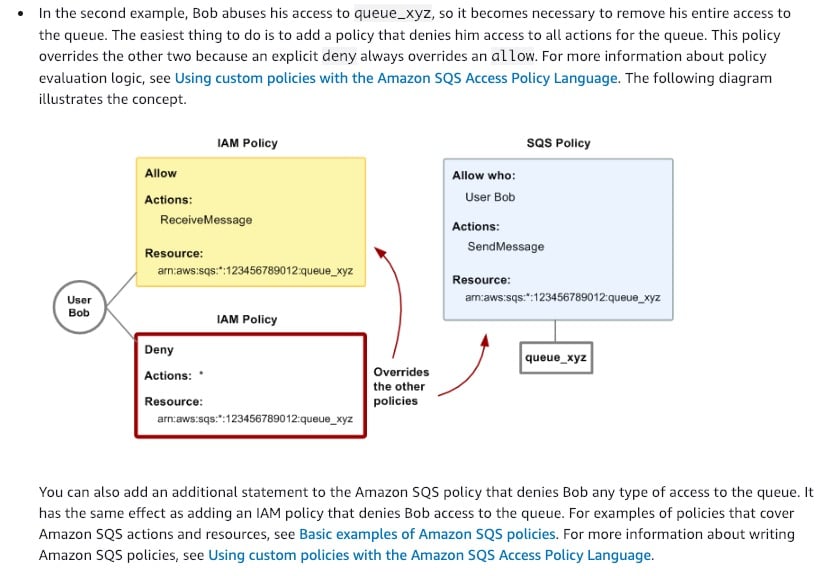

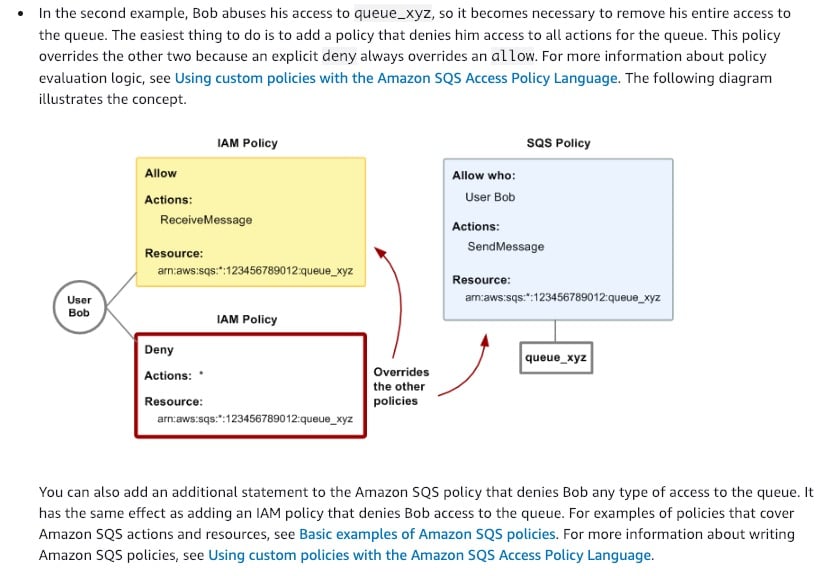

If you add a policy that denies the user access to all actions for the queue, the policy will override the other two policies and the user will not have access to example_queue

To remove all of the user's access to the queue, the easiest thing to do is to add a policy that denies him access to all actions for the queue. This policy overrides the other two because an explicit deny always overrides an allow.

You can also add an additional statement to the Amazon SQS policy that denies the user any type of access to the queue. It has the same effect as adding an IAM policy that denies the user access to the queue.


Incorrect options:

If the user sends a SendMessage request to example_queue, the IAM policy will deny this action – If the user sends a SendMessage request to example_queue, the Amazon SQS policy allows the action. The IAM policy has no explicit deny on this action, so it plays no part.

Either of IAM policies or Amazon SQS policies should be used to grant permissions. Both cannot be used together – There are two ways to give your users permissions to your Amazon SQS resources: using the Amazon SQS policy system and using the IAM policy system. You can use one or the other, or both. For the most part, you can achieve the same result with either one.

Adding only an IAM policy to deny the user of all actions on the queue is not enough. The SQS policy should also explicitly deny all action – The user can be denied access using any one of the policies. Explicit deny in any policy will override all other allow actions defined using either of the policies.
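The evaluation described above can be sketched in a few lines of Python. This is a simplified model for illustration only, not the actual IAM evaluation engine: the `Statement` class, the `is_allowed` helper, and the use of a bare queue name instead of an ARN are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    effect: str    # "Allow" or "Deny"
    action: str    # an SQS action name, or "*" for all actions
    resource: str  # queue name (simplified; real policies use ARNs)


def is_allowed(statements, action, resource):
    """Simplified same-account evaluation over IAM and SQS policy statements."""
    matching = [
        s for s in statements
        if s.resource == resource and s.action in (action, "*")
    ]
    # An explicit deny in any policy overrides every allow.
    if any(s.effect == "Deny" for s in matching):
        return False
    # Otherwise one allow from either policy is enough.
    return any(s.effect == "Allow" for s in matching)


iam_policy = [Statement("Allow", "ReceiveMessage", "example_queue")]
sqs_policy = [Statement("Allow", "SendMessage", "example_queue")]
combined = iam_policy + sqs_policy

print(is_allowed(combined, "ReceiveMessage", "example_queue"))  # True
print(is_allowed(combined, "SendMessage", "example_queue"))     # True

# Adding a single deny-all statement removes access to every action.
with_deny = combined + [Statement("Deny", "*", "example_queue")]
print(is_allowed(with_deny, "ReceiveMessage", "example_queue"))  # False
```

It makes no difference whether the deny statement is placed in the IAM policy list or the SQS policy list, which matches the explanation of the last incorrect option.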

Reference:

https://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-using-identity-based-policies.html

Question 2 of 10

2. Question

A data analytics company with its IT infrastructure on the AWS Cloud wants to build and deploy its flagship application as soon as there are any changes to the source code.

As a Developer Associate, which of the following options would you suggest to trigger the deployment? (Select two)

Keep the source code in an AWS CodeCommit repository and start AWS CodePipeline whenever a change is pushed to the CodeCommit repository

Keep the source code in an Amazon S3 bucket and start AWS CodePipeline whenever a file in the S3 bucket is updated

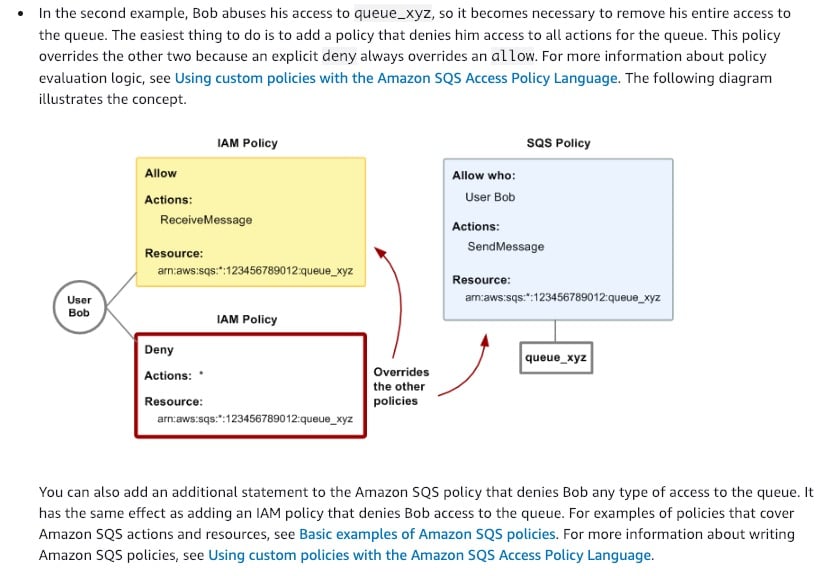

AWS CodePipeline is a fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates. CodePipeline automates the build, test, and deploy phases of your release process every time there is a code change, based on the release model you define.

How CodePipeline Works:

via – https://aws.amazon.com/codepipeline/

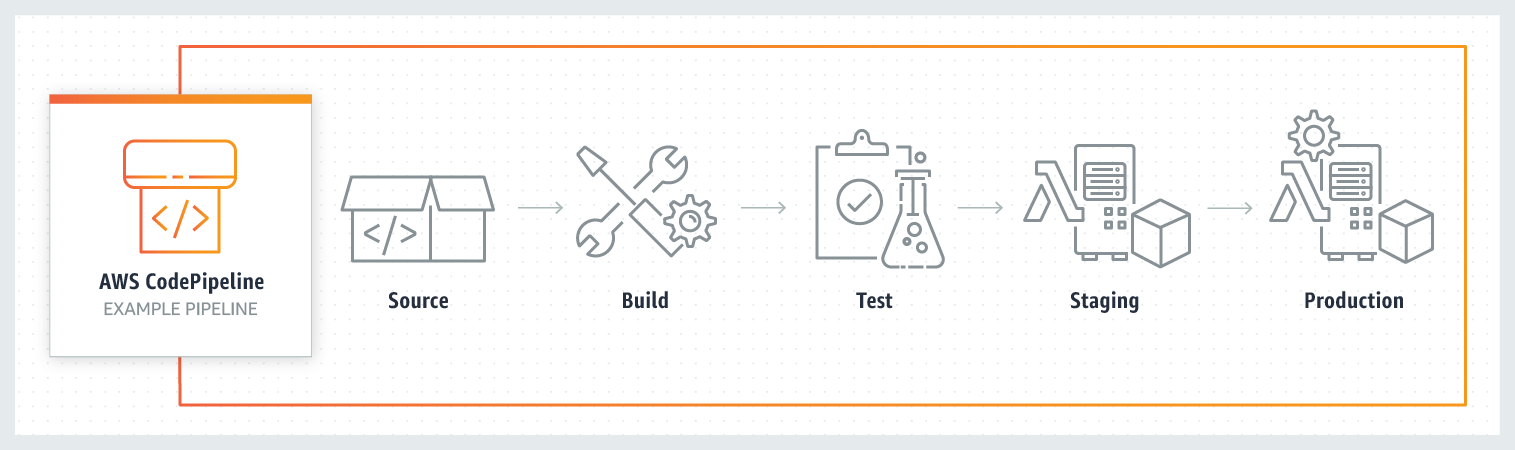

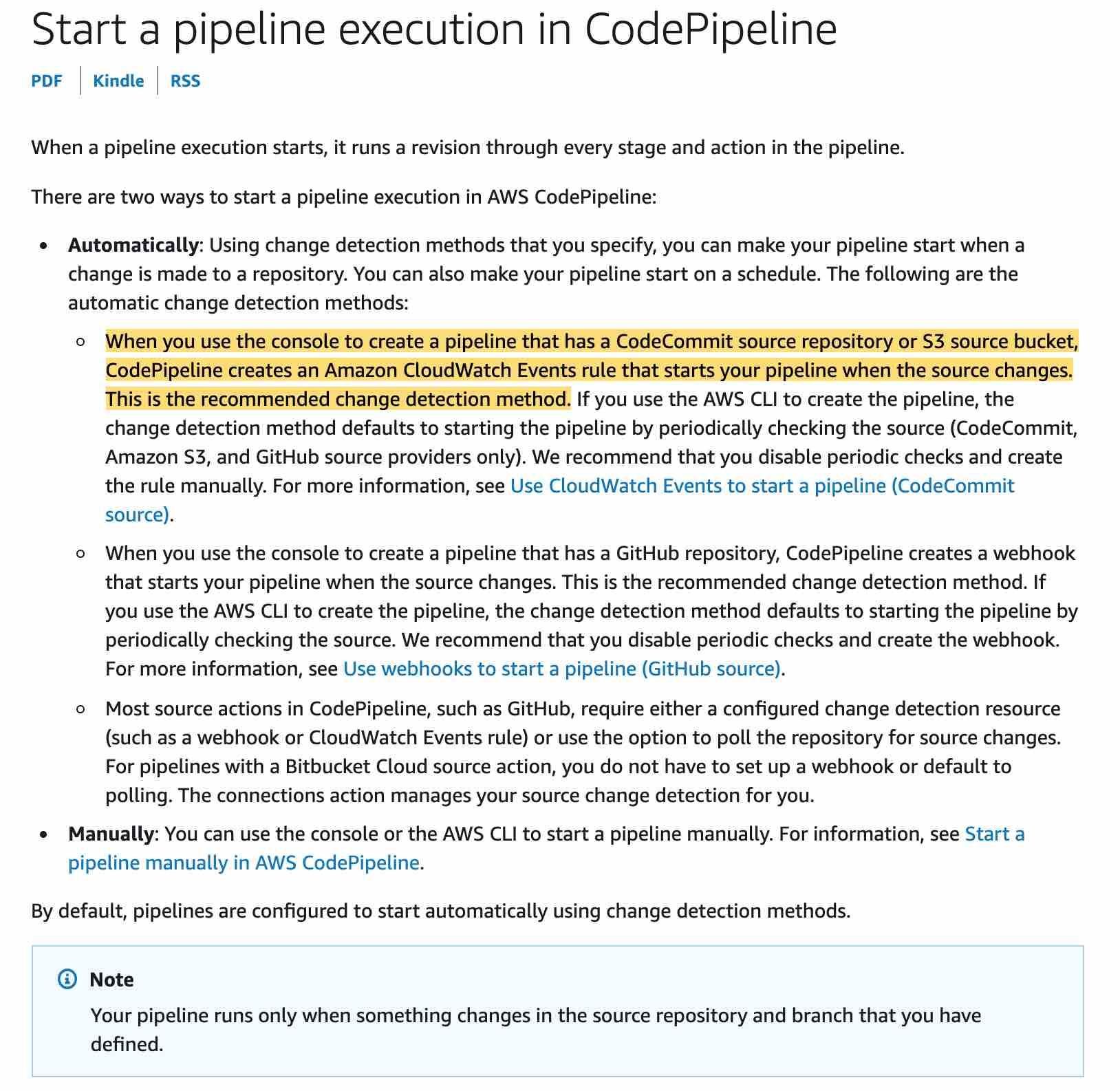

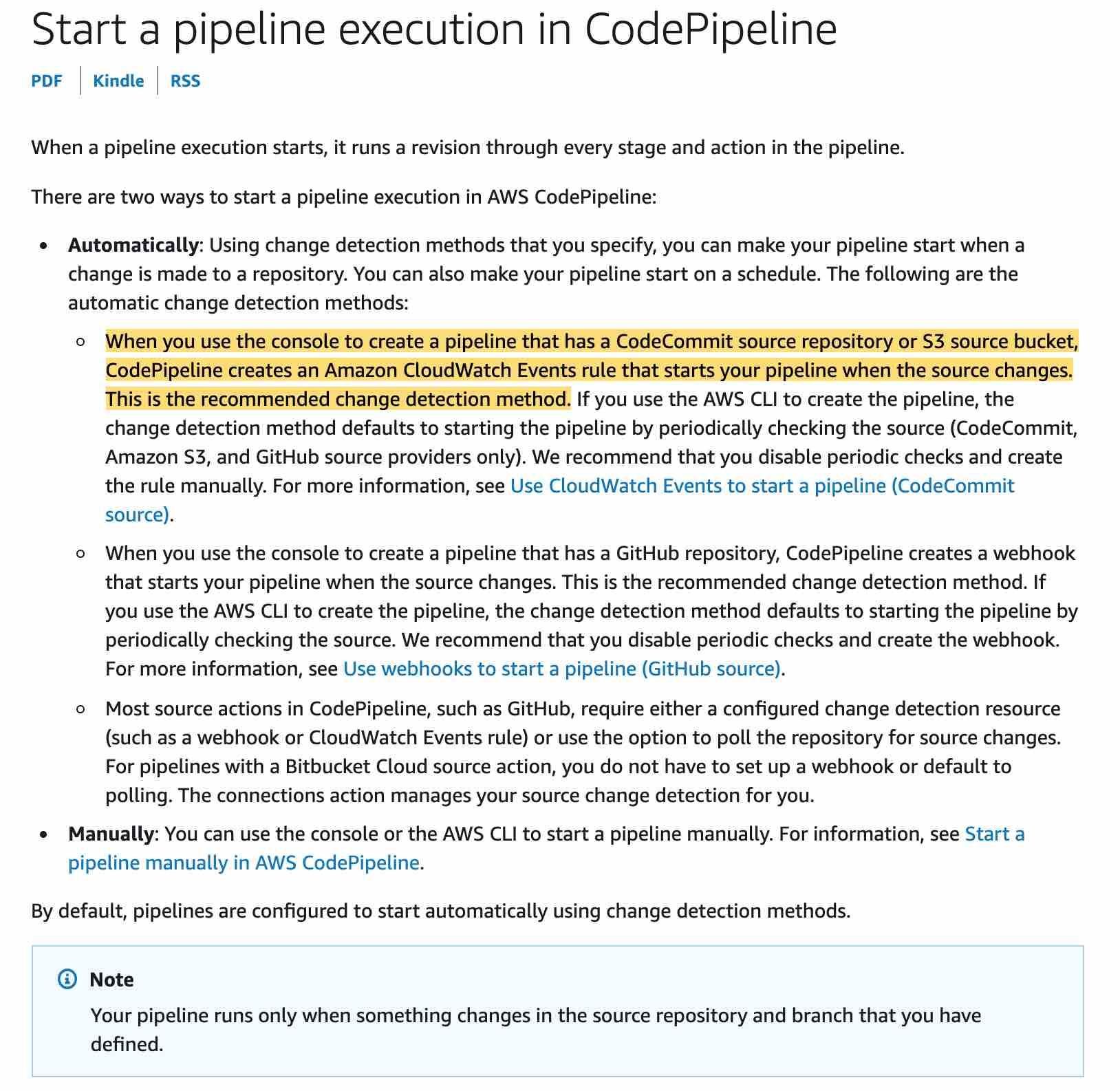

Using change detection methods that you specify, you can make your pipeline start when a change is made to a repository. You can also make your pipeline start on a schedule.

When you use the console to create a pipeline that has a CodeCommit source repository or S3 source bucket, CodePipeline creates an Amazon CloudWatch Events rule that starts your pipeline when the source changes. This is the recommended change detection method.

If you use the AWS CLI to create the pipeline, the change detection method defaults to starting the pipeline by periodically checking the source (CodeCommit, Amazon S3, and GitHub source providers only). AWS recommends that you disable periodic checks and create the rule manually.

via – https://docs.aws.amazon.com/codepipeline/latest/userguide/pipelines-about-starting.html
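As a rough illustration of event-based change detection, the following Python sketch builds the kind of event pattern a CloudWatch Events (now Amazon EventBridge) rule could use to react to pushes on a CodeCommit branch. The repository ARN, account ID, and branch name are placeholders, and the helper name `codecommit_trigger_pattern` is hypothetical; check the field names against the CodePipeline user guide before relying on them.

```python
import json


def codecommit_trigger_pattern(repo_arn, branch):
    """Build an event pattern matching branch creation or updates in one repository."""
    return {
        "source": ["aws.codecommit"],
        "detail-type": ["CodeCommit Repository State Change"],
        "resources": [repo_arn],
        "detail": {
            "event": ["referenceCreated", "referenceUpdated"],
            "referenceType": ["branch"],
            "referenceName": [branch],
        },
    }


# Placeholder ARN and branch, for illustration only.
pattern = codecommit_trigger_pattern(
    "arn:aws:codecommit:us-east-1:111122223333:example-repo", "main"
)
print(json.dumps(pattern, indent=2))
```

A rule with a pattern like this would target the pipeline, so the pipeline starts when the source changes rather than on a polling interval.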

Incorrect options:

Keep the source code in Amazon EFS and start AWS CodePipeline whenever a file is updated

Keep the source code in an Amazon EBS volume and start AWS CodePipeline whenever there are updates to the source code

Neither EFS nor EBS is supported as a source provider for CodePipeline, so the pipeline cannot detect source code changes stored on them; hence these two options are incorrect.

Keep the source code in an Amazon S3 bucket and set up AWS CodePipeline to recur at an interval of every 15 minutes – As mentioned in the explanation above, you could have the pipeline start by periodically checking the S3 bucket, but this method is inefficient and does not deploy as soon as the source changes.

Reference:

https://docs.aws.amazon.com/codepipeline/latest/userguide/pipelines-about-starting.html

Question 3 of 10

3. Question

A senior cloud engineer designs and deploys online fraud detection solutions for credit card companies processing millions of transactions daily. The Elastic Beanstalk application sends files to Amazon S3 and then sends a message to an Amazon SQS queue containing the path of the uploaded file in S3. The engineer wants to postpone the delivery of any new messages to the queue for at least 10 seconds.

Which SQS feature should the engineer leverage?

Use DelaySeconds parameter

Amazon Simple Queue Service (SQS) is a fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications. SQS offers two types of message queues. Standard queues offer maximum throughput, best-effort ordering, and at-least-once delivery. SQS FIFO queues are designed to guarantee that messages are processed exactly once, in the exact order that they are sent.

Delay queues let you postpone the delivery of new messages to a queue for several seconds, for example, when your consumer application needs additional time to process messages. If you create a delay queue, any messages that you send to the queue remain invisible to consumers for the duration of the delay period. The default (minimum) delay for a queue is 0 seconds. The maximum is 15 minutes.

via – https://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-delay-queues.html
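A minimal Python sketch of how the delay might be applied per message follows. It only builds and validates the request parameters; the queue URL, the S3 path, and the helper name `build_send_message_params` are assumptions for illustration.

```python
MAX_DELAY_SECONDS = 900  # 15 minutes, the maximum delay stated above


def build_send_message_params(queue_url, file_path, delay_seconds=10):
    """Return keyword arguments for an SQS SendMessage call with a delivery delay."""
    if not 0 <= delay_seconds <= MAX_DELAY_SECONDS:
        raise ValueError(
            f"delay_seconds must be between 0 and {MAX_DELAY_SECONDS}"
        )
    return {
        "QueueUrl": queue_url,
        "MessageBody": file_path,       # path of the uploaded file in S3
        "DelaySeconds": delay_seconds,  # message stays invisible for this long
    }


# Placeholder queue URL and S3 path, for illustration only.
params = build_send_message_params(
    "https://sqs.us-east-1.amazonaws.com/111122223333/example_queue",
    "s3://example-bucket/uploads/transactions.csv",
)
print(params["DelaySeconds"])  # 10
```

With boto3, these parameters would typically be passed as `boto3.client("sqs").send_message(**params)`. Alternatively, a queue-wide default can be set through the queue's `DelaySeconds` attribute, so that every new message is delayed without per-message changes.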

Incorrect options:

Implement application-side delay – You can customize your application to delay sending messages, but this is not a robust solution. If the application crashes before it sends a delayed message, that message is lost.

Use visibility timeout parameter – Visibility timeout is a period during which Amazon SQS prevents other consumers from receiving and processing a given message. The default visibility timeout for a message is 30 seconds. The minimum is 0 seconds. The maximum is 12 hours. You cannot use visibility timeout to postpone the delivery of new messages to the queue for a few seconds.

Enable LongPolling – Long polling makes it inexpensive to retrieve messages from your Amazon SQS queue as soon as the messages are available. It helps reduce the cost of using Amazon SQS by reducing the number of empty responses (when there are no messages available for a ReceiveMessage request) and eliminating false empty responses (when messages are available but aren't included in a response). When the wait time for the ReceiveMessage API action is greater than 0, long polling is in effect. The maximum long polling wait time is 20 seconds. Long polling only affects how consumers receive messages, so you cannot use it to postpone the delivery of new messages to the queue.

Reference:

https://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-delay-queues.html

Question 4 of 10

4. Question

A developer is configuring an Application Load Balancer (ALB) to direct traffic to the application's EC2 instances and Lambda functions.

Which of the following characteristics of the ALB can be identified as correct? (Select two)

An ALB has three possible target types: Instance, IP and Lambda

When you create a target group, you specify its target type, which determines the type of target you specify when registering targets with this target group. After you create a target group, you cannot change its target type. The following are the possible target types:

1. Instance – The targets are specified by instance ID

2. IP – The targets are IP addresses

3. Lambda – The target is a Lambda function

You can not specify publicly routable IP addresses to an ALB

When the target type is IP, you can specify IP addresses from specific CIDR blocks only. You can't specify publicly routable IP addresses.
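The CIDR restriction can be illustrated with a short Python check. The block list below is an assumption based on the private and shared address ranges listed in the Elastic Load Balancing documentation (plus the subnets of the target group's VPC); verify it against the reference before relying on it. The helper name `is_registrable_ip_target` is hypothetical.

```python
import ipaddress

# Assumed allowed ranges: RFC 1918 private ranges and the RFC 6598 shared range.
ALLOWED_BLOCKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "100.64.0.0/10", "172.16.0.0/12", "192.168.0.0/16")
]


def is_registrable_ip_target(address, vpc_cidrs=()):
    """Return True if the address falls in an allowed block or a given VPC CIDR."""
    ip = ipaddress.ip_address(address)
    blocks = ALLOWED_BLOCKS + [ipaddress.ip_network(c) for c in vpc_cidrs]
    return any(ip in block for block in blocks)


print(is_registrable_ip_target("10.1.2.3"))  # True
print(is_registrable_ip_target("8.8.8.8"))   # False
```

A publicly routable address such as 8.8.8.8 falls outside every allowed block, which is why it cannot be registered as an IP target.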

Incorrect options:

If you specify targets using an instance ID, traffic is routed to instances using any private IP address from one or more network interfaces – If you specify targets using an instance ID, traffic is routed to instances using the primary private IP address specified in the primary network interface for the instance.

If you specify targets using IP addresses, traffic is routed to instances using the primary private IP address – If you specify targets using IP addresses, you can route traffic to an instance using any private IP address from one or more network interfaces. This enables multiple applications on an instance to use the same port.

An ALB has three possible target types: Hostname, IP and Lambda – This is incorrect, as described in the correct explanation above.

Reference:

https://docs.aws.amazon.com/elasticloadbalancing/latest/application/load-balancer-target-groups.html

Question 5 of 10

5. Question

A firm maintains a highly available application that receives HTTPS traffic from mobile devices and web browsers. The main developer would like to set up the Load Balancer routing to route traffic from web browsers to smart.com/api and from mobile devices to smart.com/mobile. Another developer advises that the previous recommendation is not needed and that requests should be sent to api.smart.com and mobile.smart.com instead.

Which of the following routing options were discussed in the given use-case? (Select two)

Path based

You can create a listener with rules to forward requests based on the URL path. This is known as path-based routing. If you are running microservices, you can route traffic to multiple back-end services using path-based routing. For example, you can route general requests to one target group and requests to render images to another target group.

This path-based routing allows you to route requests to, for example, /api to one set of servers (also known as target groups) and /mobile to another set. Segmenting your traffic in this way gives you the ability to control the processing environment for each category of requests. Perhaps /api requests are best processed on Compute Optimized instances, while /mobile requests are best handled by Memory Optimized instances.

Host based

You can create Application Load Balancer rules that route incoming traffic based on the domain name specified in the Host header. Requests to api.example.com can be sent to one target group, requests to mobile.example.com to another, and all others (by way of a default rule) can be sent to a third. You can also create rules that combine host-based routing and path-based routing. This would allow you to route requests to api.example.com/production and api.example.com/sandbox to distinct target groups.

via – https://docs.aws.amazon.com/elasticloadbalancing/latest/application/load-balancer-listeners.html#rule-condition-types
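The two routing styles can be contrasted with a small Python sketch that mimics how listener rules might be evaluated in order. This is a toy model, not the ALB rule engine: the rule table, the target group names, and the `pick_target_group` helper are all made up for illustration.

```python
from fnmatch import fnmatchcase

# Rules are checked in order; the first match wins.
RULES = [
    # Host-based routing: decide on the Host header.
    {"host": "api.smart.com", "path": "*", "target_group": "api-servers"},
    {"host": "mobile.smart.com", "path": "*", "target_group": "mobile-servers"},
    # Path-based routing: decide on the URL path.
    {"host": "*", "path": "/api*", "target_group": "api-servers"},
    {"host": "*", "path": "/mobile*", "target_group": "mobile-servers"},
]
DEFAULT_TARGET_GROUP = "default-servers"


def pick_target_group(host, path):
    """Return the target group of the first rule matching both host and path."""
    for rule in RULES:
        if fnmatchcase(host.lower(), rule["host"]) and fnmatchcase(path, rule["path"]):
            return rule["target_group"]
    return DEFAULT_TARGET_GROUP


print(pick_target_group("smart.com", "/api/orders"))  # api-servers
print(pick_target_group("mobile.smart.com", "/"))     # mobile-servers
print(pick_target_group("smart.com", "/index.html"))  # default-servers
```

The first two rules correspond to the second developer's suggestion (api.smart.com and mobile.smart.com), while the last two correspond to the original smart.com/api and smart.com/mobile proposal.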

Incorrect options:

Client IP – This option has been added as a distractor. The use case does not route on the client's IP address.

Web browser version – The use case does not route on the client's web browser version.

Cookie value – Cookies were not discussed in the use case. An ALB uses cookies for sticky sessions, which send a client's requests to the same target and require the client's browser to support cookies.

Reference:

https://aws.amazon.com/blogs/aws/new-host-based-routing-support-for-aws-application-load-balancers/Incorrect

Question 6 of 10

6. Question

An IT company has a web application running on Amazon EC2 instances that needs read-only access to an Amazon DynamoDB table.

As a Developer Associate, what is the best-practice solution you would recommend to accomplish this task?

Create an IAM role with an AmazonDynamoDBReadOnlyAccess policy and apply it to the EC2 instance profile

As an AWS security best practice, you should not create an IAM user and pass the user's credentials to the application or embed the credentials in the application. Instead, create an IAM role that you attach to the EC2 instance to give temporary security credentials to applications running on the instance. When an application uses these credentials in AWS, it can perform all of the operations that are allowed by the policies attached to the role.

So for the given use-case, you should create an IAM role with an AmazonDynamoDBReadOnlyAccess policy and apply it to the EC2 instance profile.

via – https://docs.aws.amazon.com/IAM/latest/UserGuide/id.html
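The role-based approach can be sketched as follows. This is an illustrative outline, not a complete setup: the helper names are made up, the table name and region are supplied by the caller, and the read function assumes the third-party boto3 package is installed and that the code runs on an instance whose profile carries the role.

```python
import json

# ARN of the AWS managed policy named in the answer.
READ_ONLY_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonDynamoDBReadOnlyAccess"


def ec2_trust_policy():
    """Trust policy that lets the EC2 service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def read_item(table_name, key, region_name):
    """Read one item; note that no access keys appear anywhere in the code."""
    import boto3  # third-party; imported lazily so the sketch loads without it

    # On an instance with the role attached, boto3 picks up the temporary
    # credentials from the instance profile automatically.
    table = boto3.resource("dynamodb", region_name=region_name).Table(table_name)
    return table.get_item(Key=key).get("Item")


if __name__ == "__main__":
    print(json.dumps(ec2_trust_policy(), indent=2))
```

The point of the design is that credentials are temporary and rotated for you, so nothing secret has to be stored in the code or redeployed on rotation.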

Incorrect options:

Create a new IAM user with access keys. Attach an inline policy to the IAM user with read-only access to DynamoDB. Place the keys in the code. For security, redeploy the code whenever the keys rotate

Create an IAM user with Administrator access and configure AWS credentials for this user on the given EC2 instance

Run application code with AWS account root user credentials to ensure full access to all AWS services

As mentioned in the explanation above, it is dangerous to pass an IAM user's credentials to the application or embed the credentials in the application. The security risks are even greater when you use an IAM user with admin privileges or the AWS account root user. So all three options are incorrect.

Reference:

https://docs.aws.amazon.com/IAM/latest/UserGuide/id.html

Question 7 of 10

7. Question

After reviewing your monthly AWS bill you notice that the cost of using Amazon SQS has gone up substantially after creating new queues; however, you know that your queue clients do not have a lot of traffic and are receiving empty responses.

Which of the following actions should you take?

Use LongPolling

Amazon Simple Queue Service (SQS) is a fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications.

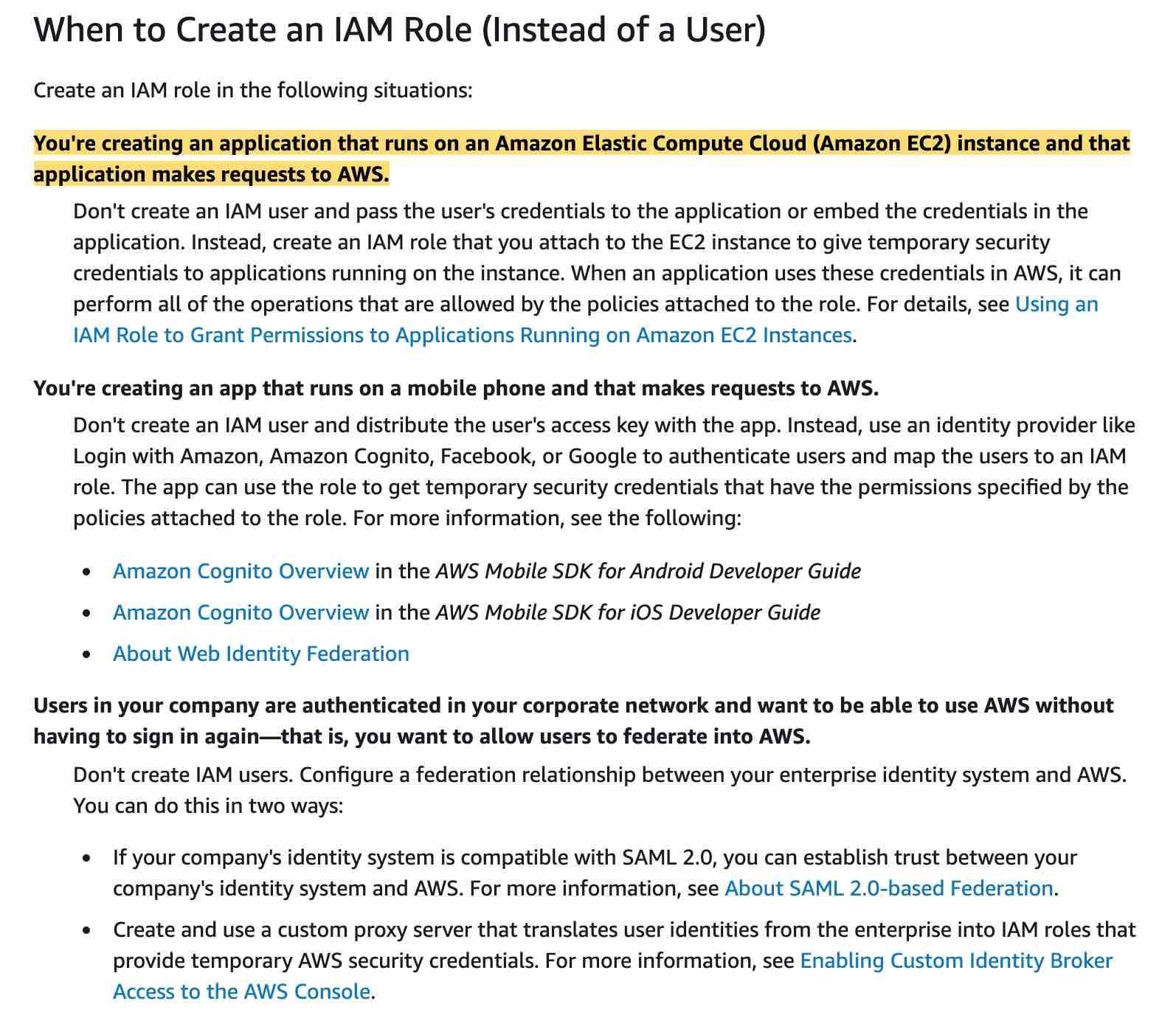

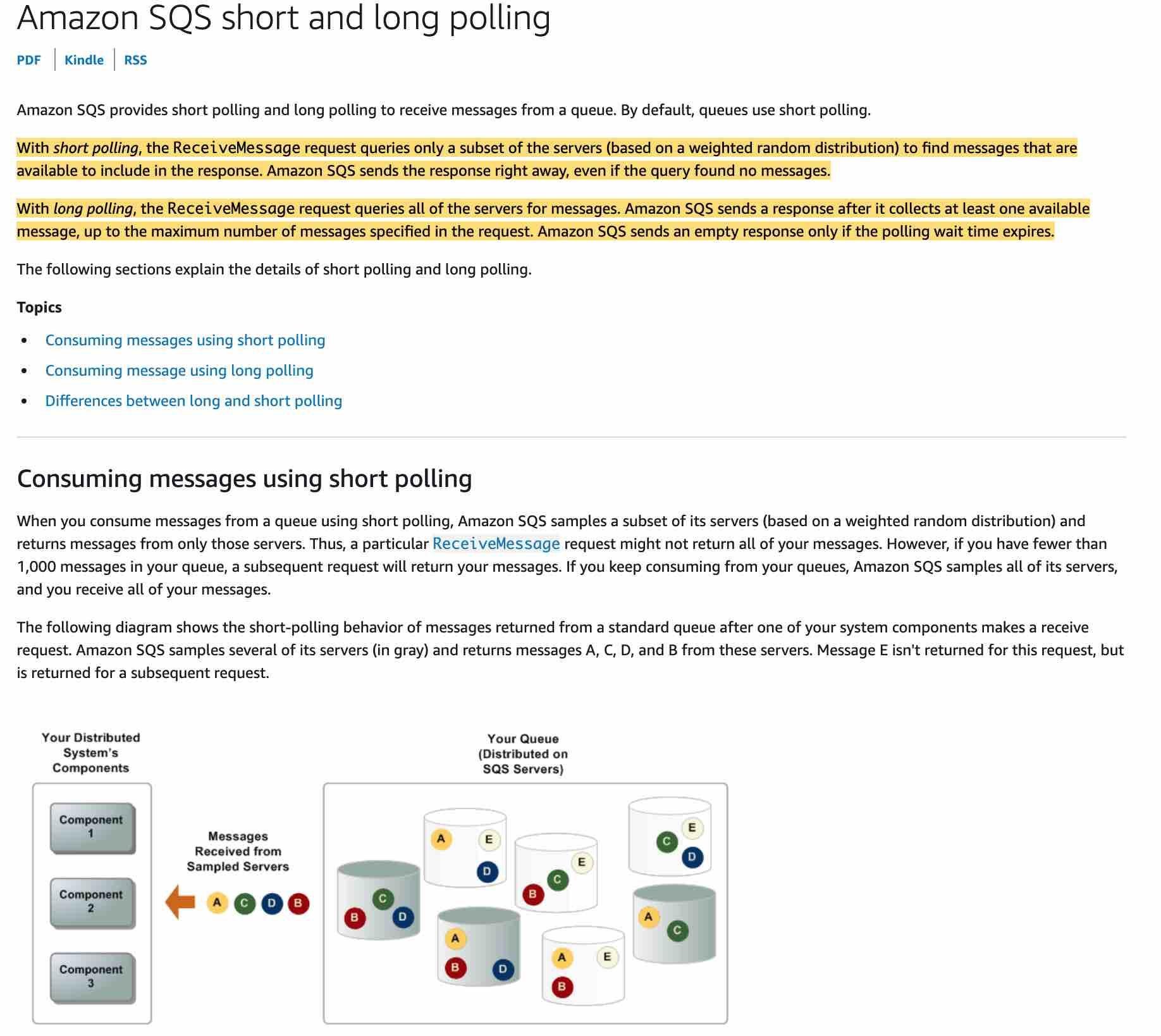

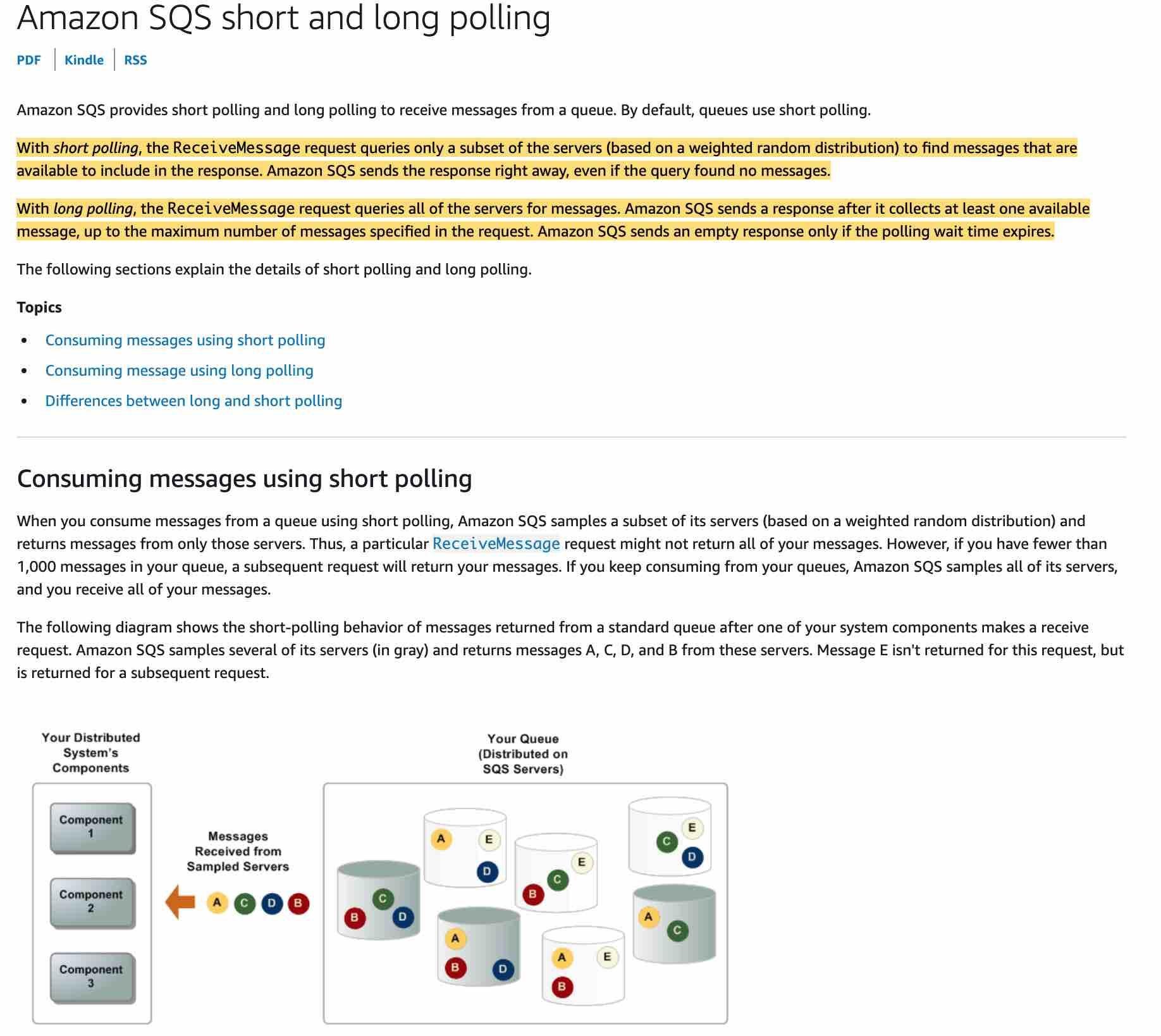

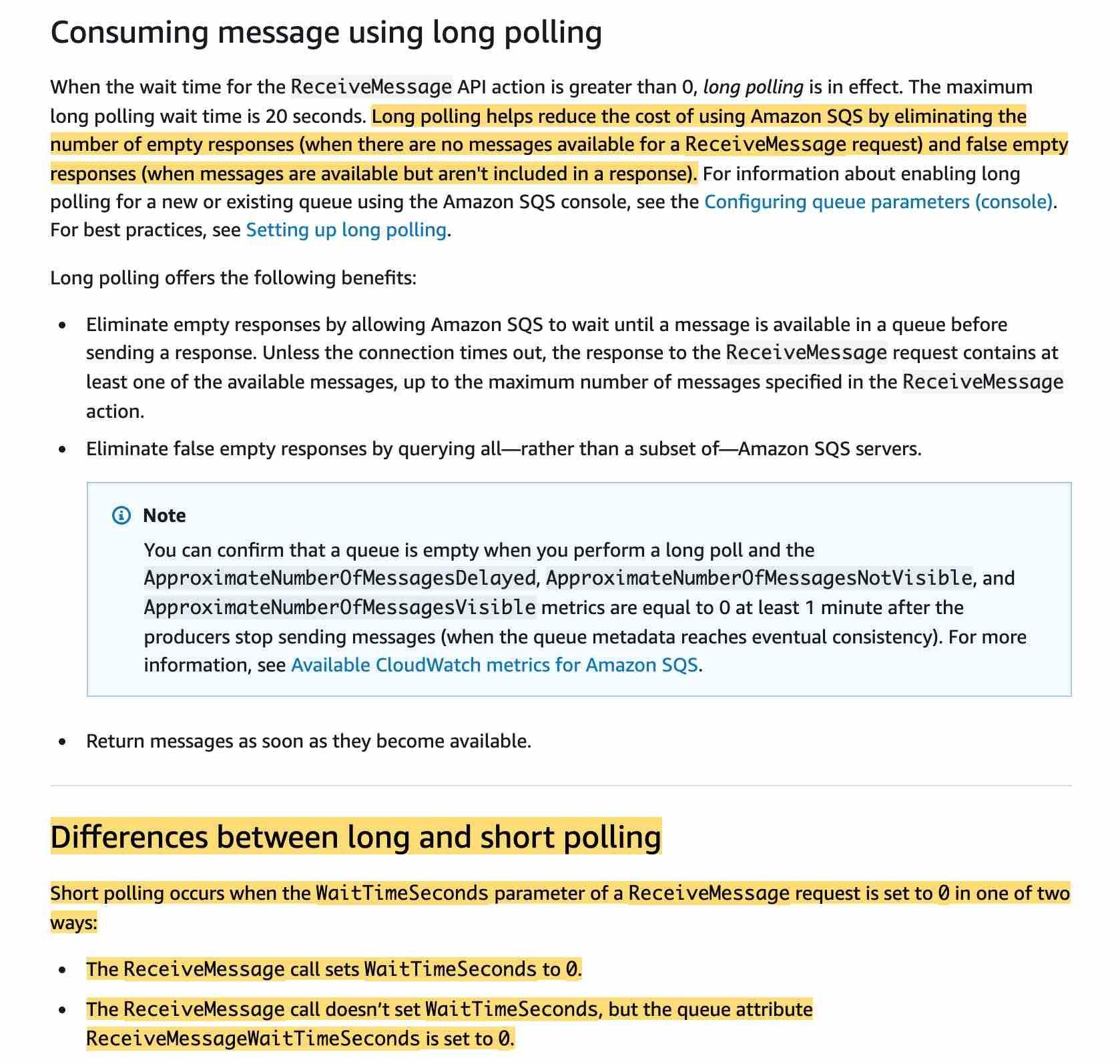

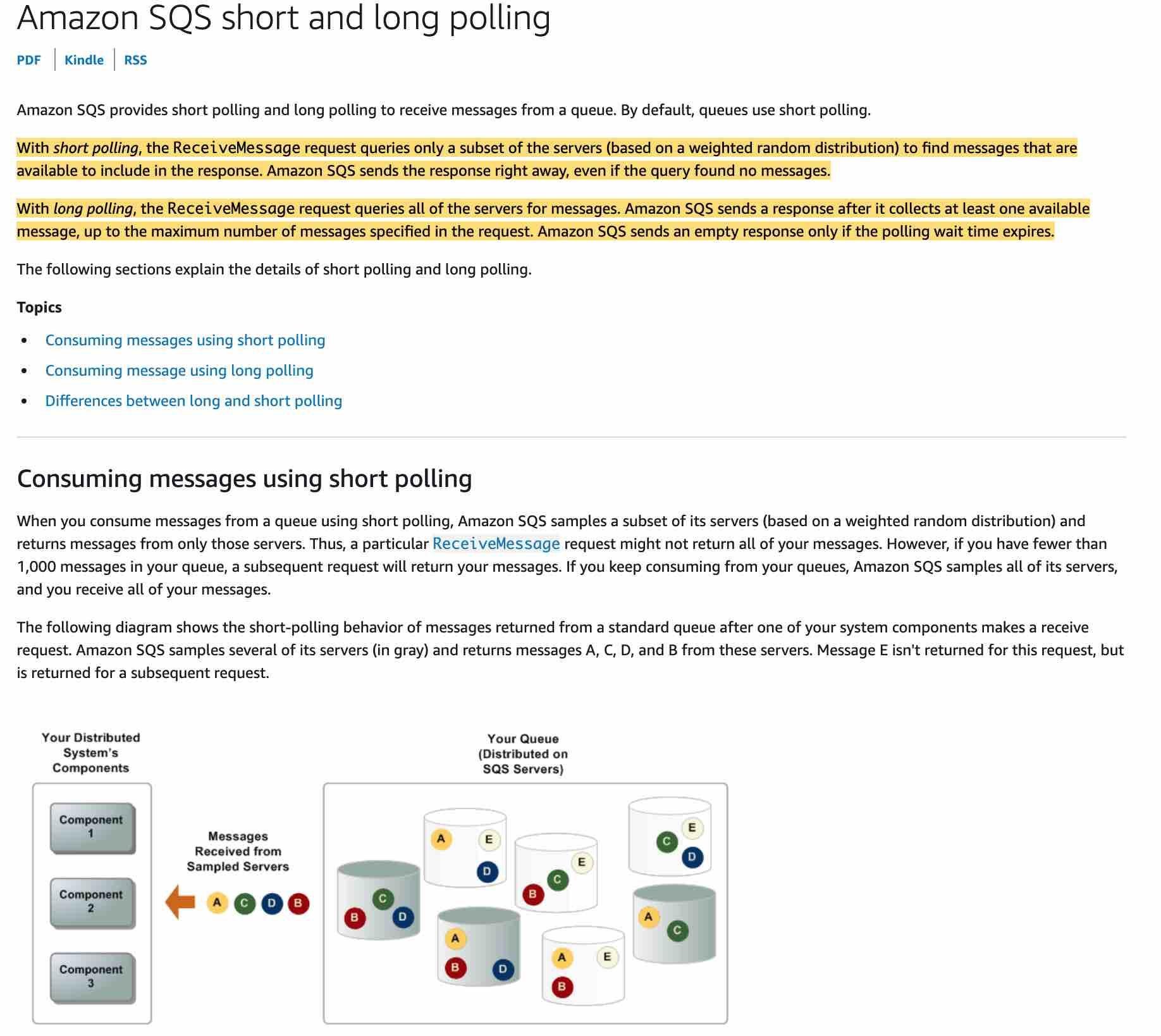

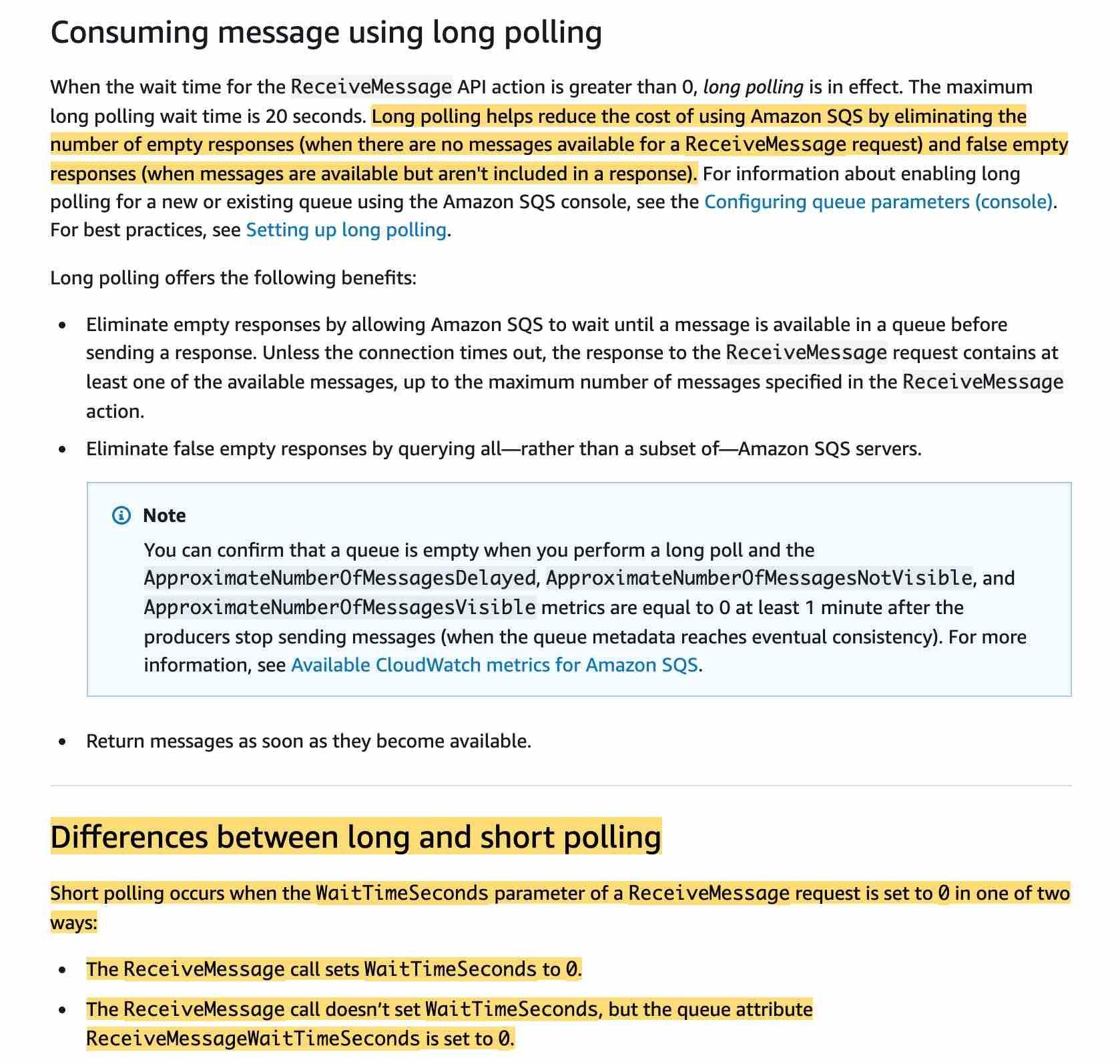

Amazon SQS provides short polling and long polling to receive messages from a queue. By default, queues use short polling. With short polling, Amazon SQS sends the response right away, even if the query found no messages. With long polling, Amazon SQS sends a response after it collects at least one available message, up to the maximum number of messages specified in the request. Amazon SQS sends an empty response only if the polling wait time expires.

Long polling makes it inexpensive to retrieve messages from your Amazon SQS queue as soon as the messages are available. Long polling helps reduce the cost of using Amazon SQS by reducing the number of empty responses (when there are no messages available for a ReceiveMessage request) and false empty responses (when messages are available but aren't included in a response). When the wait time for the ReceiveMessage API action is greater than 0, long polling is in effect. The maximum long polling wait time is 20 seconds.
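As a rough sketch of what enabling long polling looks like from a consumer, the helpers below build ReceiveMessage parameters with the wait time capped at the 20-second maximum, and estimate how many requests an idle consumer issues per hour. The function names and the queue URL passed by the caller are assumptions for illustration; poll_once additionally assumes the third-party boto3 package and valid AWS credentials.

```python
MAX_WAIT_SECONDS = 20  # maximum long polling wait time
MAX_BATCH_SIZE = 10    # ReceiveMessage returns at most 10 messages


def receive_params(queue_url, wait_seconds=MAX_WAIT_SECONDS, max_messages=MAX_BATCH_SIZE):
    """Build ReceiveMessage arguments; any wait time above 0 means long polling."""
    wait = max(0, min(int(wait_seconds), MAX_WAIT_SECONDS))
    batch = max(1, min(int(max_messages), MAX_BATCH_SIZE))
    return {"QueueUrl": queue_url, "WaitTimeSeconds": wait, "MaxNumberOfMessages": batch}


def empty_receives_per_hour(seconds_per_request):
    """Requests made in one hour by a consumer polling an empty queue back to back."""
    return 3600 // seconds_per_request


def poll_once(queue_url):
    """Issue one long-polling receive call and return the messages, if any."""
    import boto3  # third-party; imported lazily so the sketch loads without it

    sqs = boto3.client("sqs")
    response = sqs.receive_message(**receive_params(queue_url))
    return response.get("Messages", [])


if __name__ == "__main__":
    print(empty_receives_per_hour(1))   # one empty request per second
    print(empty_receives_per_hour(20))  # one empty request per 20-second wait
```

Because SQS bills per request, an idle consumer that waits the full 20 seconds per call makes far fewer billable requests than one that polls every second. Long polling can also be enabled for the whole queue through its ReceiveMessageWaitTimeSeconds attribute instead of per request.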

Exam Alert:

Please review the differences between Short Polling vs Long Polling:

via – https://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-short-and-long-polling.html


Incorrect options:

Increase the VisibilityTimeout – Because Amazon SQS cannot know whether a consumer actually processed a message it received, the consumer must delete the message explicitly. To prevent other consumers from processing the same message in the meantime, Amazon SQS hides it for the duration of the visibility timeout. Visibility timeout will not help with cost reduction.

Use a FIFO queue – FIFO queues are designed to enhance messaging between applications when the order of operations and events has to be enforced. FIFO queues will not help with cost reduction. In fact, they are costlier than standard queues.

Decrease DelaySeconds – This is similar to VisibilityTimeout. The difference is that a message is hidden when it is first added to a queue for DelaySeconds, whereas for visibility timeouts a message is hidden only after it is consumed from the queue. DelaySeconds will not help with cost reduction.

Reference:

https://docs.aws.amazon.com/AWSSimpleQueueService/latest/SQSDeveloperGuide/sqs-short-and-long-polling.html

Question 8 of 10

8. Question

You are planning to build a fleet of EBS-optimized EC2 instances to handle the load of your new application. Due to security compliance, your organization wants any secret strings used in the application to be encrypted to prevent exposing values as clear text.

The solution requires that decryption events be audited and that API calls be simple. How can this be achieved? (Select two)

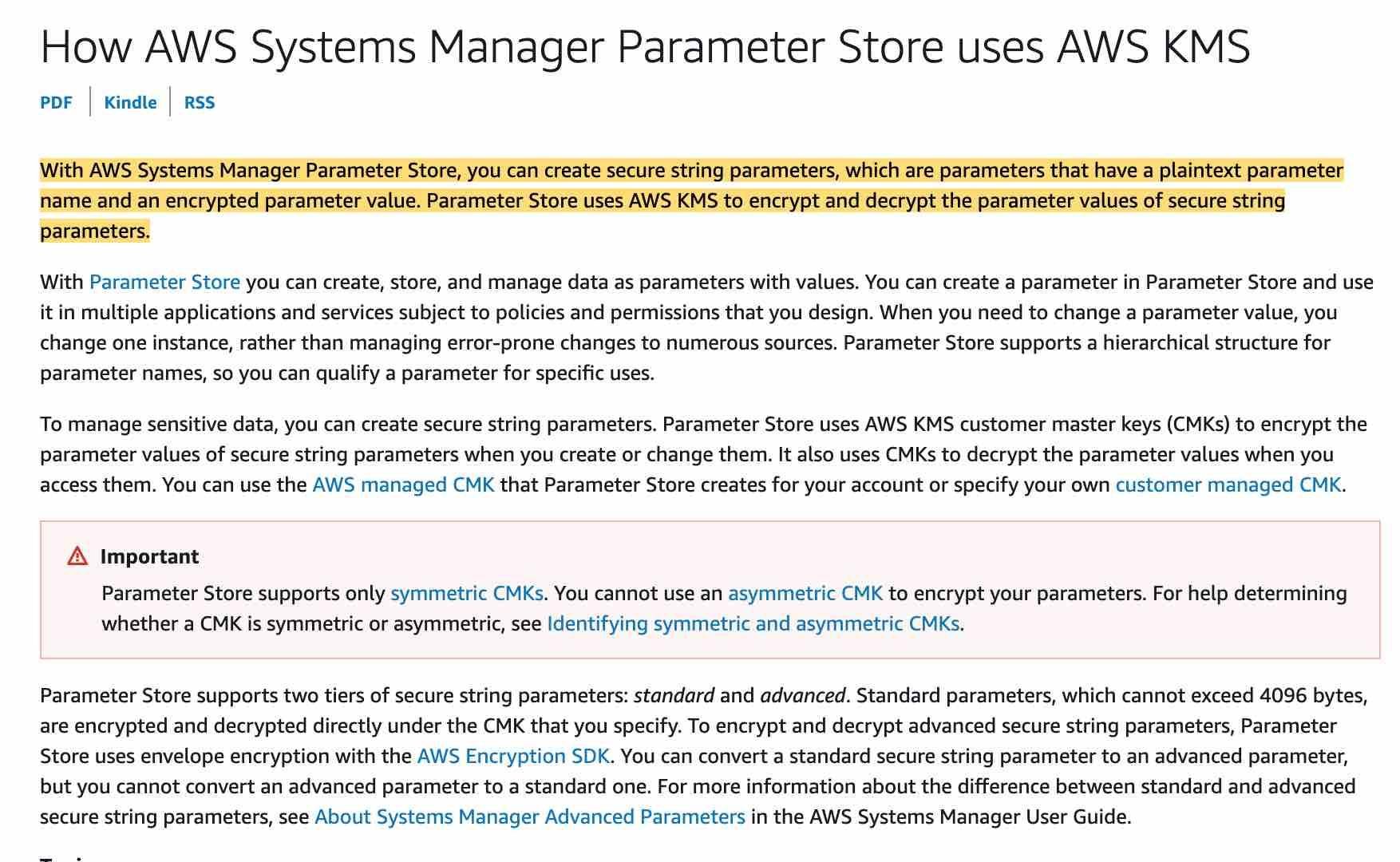

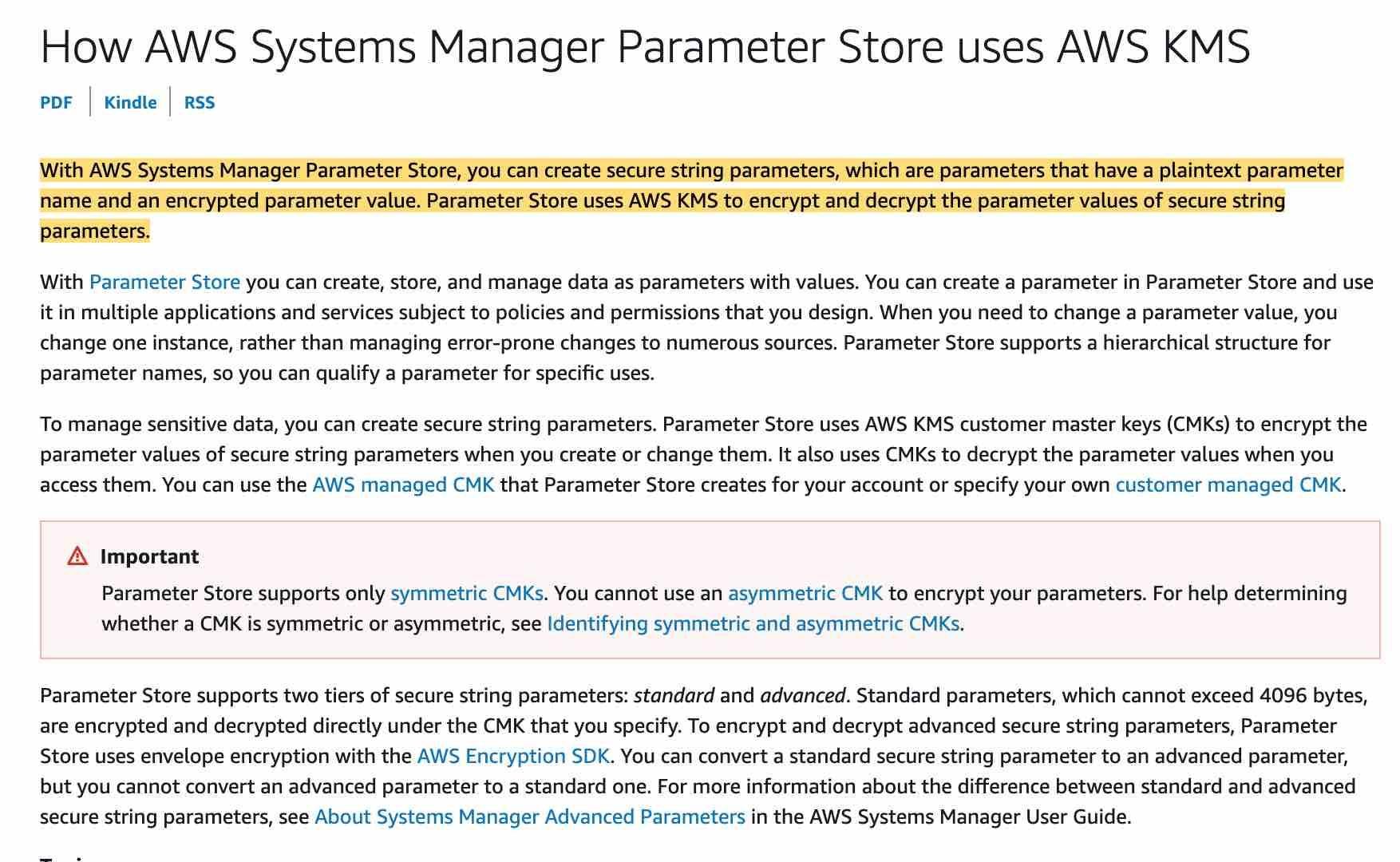

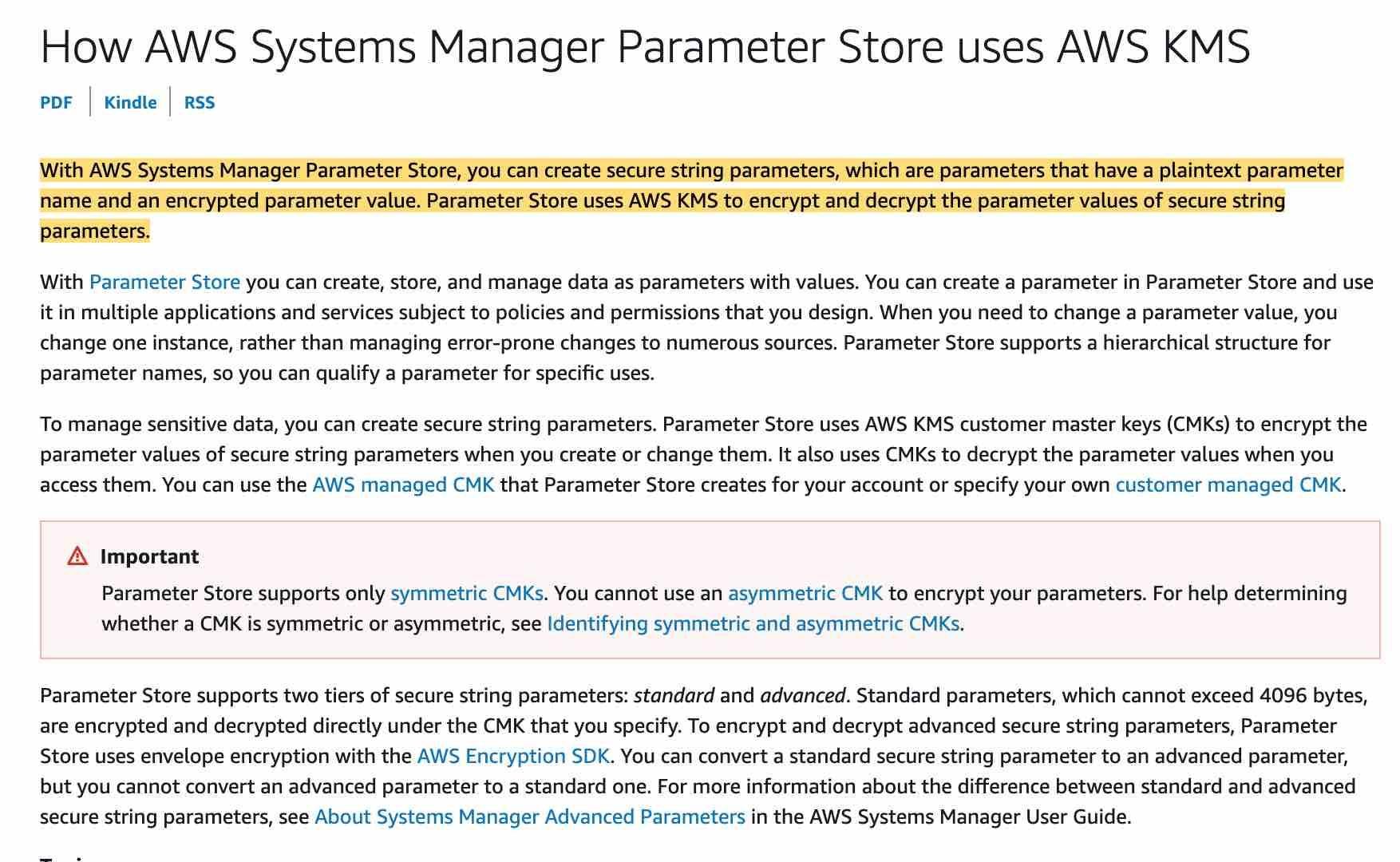

Store the secret as SecureString in SSM Parameter Store

With AWS Systems Manager Parameter Store, you can create SecureString parameters, which are parameters that have a plaintext parameter name and an encrypted parameter value. Parameter Store uses AWS KMS to encrypt and decrypt the parameter values of SecureString parameters. Also, if you are using customer-managed CMKs, you can use IAM policies and key policies to manage encrypt and decrypt permissions. To retrieve the decrypted value, you only need to make one API call.

via – https://docs.aws.amazon.com/kms/latest/developerguide/services-parameter-store.html
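The single-call retrieval can be sketched like this. The helper names and any parameter name passed in are hypothetical; read_secret assumes the third-party boto3 package, valid credentials, and permission to use the KMS key that encrypted the parameter.

```python
def put_secret_params(name, value):
    """Arguments for storing a value as an encrypted SecureString parameter."""
    return {"Name": name, "Value": value, "Type": "SecureString", "Overwrite": True}


def get_secret_params(name):
    """Arguments for reading the parameter back, decrypted, in one call."""
    return {"Name": name, "WithDecryption": True}


def read_secret(name):
    """Fetch and decrypt a SecureString parameter with a single GetParameter call."""
    import boto3  # third-party; imported lazily so the sketch loads without it

    ssm = boto3.client("ssm")
    return ssm.get_parameter(**get_secret_params(name))["Parameter"]["Value"]


if __name__ == "__main__":
    print(get_secret_params("/myapp/db-password"))  # hypothetical parameter name
```

Parameter Store calls KMS on the caller's behalf when WithDecryption is set, which is why the application does not need a separate Decrypt call.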

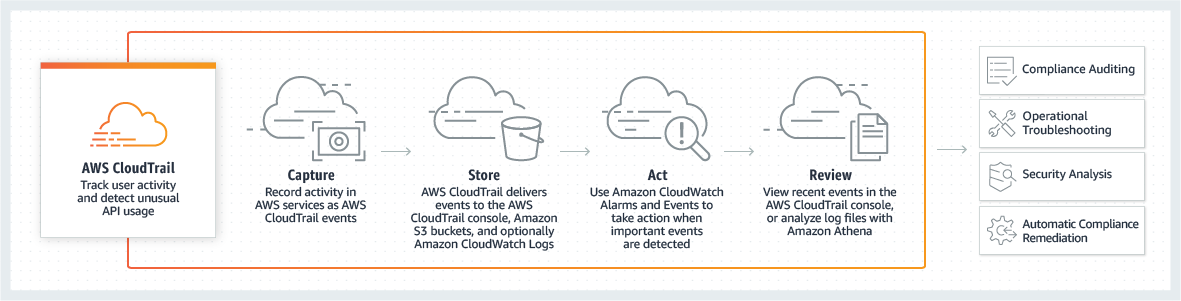

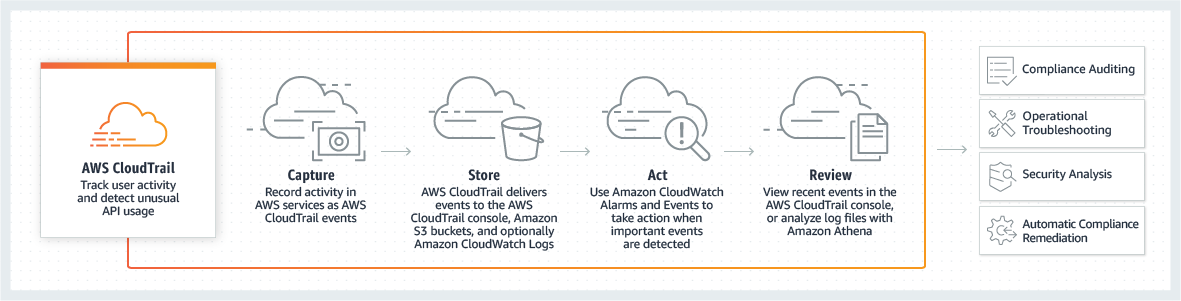

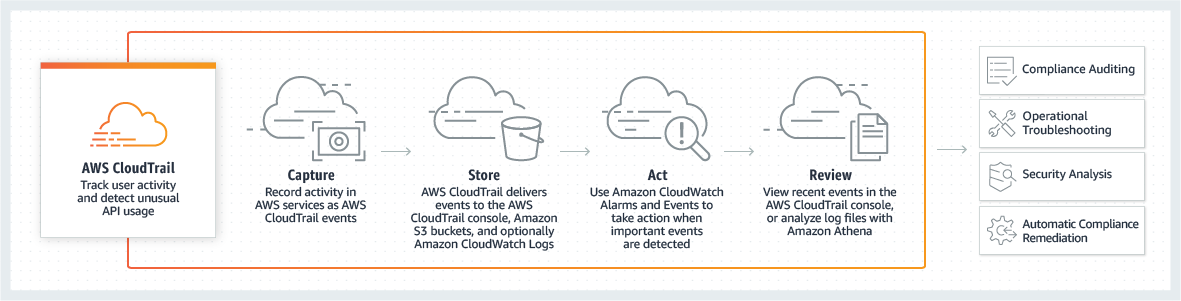

Audit using CloudTrail

AWS CloudTrail is a service that enables governance, compliance, operational auditing, and risk auditing of your AWS account. With CloudTrail, you can log, continuously monitor, and retain account activity related to actions across your AWS infrastructure. CloudTrail provides an event history of your AWS account activity, including actions taken through the AWS Management Console, AWS SDKs, command-line tools, and other AWS services.

CloudTrail will allow you to see all API calls made to SSM and KMS.
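One way to review decryption events is to look up KMS Decrypt calls in the CloudTrail event history. The sketch below is an assumption-laden outline: the helper names are invented, the sample record in the usage section is made up, and recent_decrypts needs the third-party boto3 package plus permission to call LookupEvents.

```python
import json


def decrypt_lookup_params():
    """LookupEvents filter that selects events named Decrypt."""
    return {"LookupAttributes": [{"AttributeKey": "EventName", "AttributeValue": "Decrypt"}]}


def summarize(record):
    """Reduce a CloudTrail record to when, who and what."""
    return {
        "when": record.get("eventTime"),
        "who": record.get("userIdentity", {}).get("arn"),
        "what": f'{record.get("eventSource")}:{record.get("eventName")}',
    }


def recent_decrypts():
    """Return summaries of recent Decrypt events from the event history."""
    import boto3  # third-party; imported lazily so the sketch loads without it

    cloudtrail = boto3.client("cloudtrail")
    events = cloudtrail.lookup_events(**decrypt_lookup_params())["Events"]
    # Each event carries the full record as a JSON string.
    return [summarize(json.loads(event["CloudTrailEvent"])) for event in events]


if __name__ == "__main__":
    sample = {  # made-up record for illustration only
        "eventTime": "2024-01-01T00:00:00Z",
        "eventSource": "kms.amazonaws.com",
        "eventName": "Decrypt",
        "userIdentity": {"arn": "arn:aws:sts::111122223333:assumed-role/app-role/i-0abc"},
    }
    print(summarize(sample))
```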

Incorrect options:

Encrypt first with KMS then store in SSM Parameter store – This could work but will require two API calls to get the decrypted value instead of one. So this is not the right option.

Store the secret as PlainText in SSM Parameter Store – Plaintext parameters are not secure and shouldn't be used to store secrets.

Audit using SSM Audit Trail – This is a made-up option and has been added as a distractor.

Reference:

https://docs.aws.amazon.com/kms/latest/developerguide/services-parameter-store.html

Question 9 of 10

9. Question

You have been hired at a company that needs an experienced developer to help with a continuous integration/continuous delivery (CI/CD) workflow on AWS. You configure the company’s workflow to run an AWS CodePipeline pipeline whenever the application’s source code changes in a repository hosted in AWS CodeCommit, and to compile the source code with AWS CodeBuild. You are configuring ProjectArtifacts in your build stage.

Which of the following should you do?

Give AWS CodeBuild permissions to upload the build output to your Amazon S3 bucket

If you choose ProjectArtifacts and set the artifact type to S3, the build project stores its build output in Amazon Simple Storage Service (Amazon S3). For that to work, you need to give AWS CodeBuild permission to upload to the bucket.
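The permission in question is typically granted through a policy on the CodeBuild service role. The sketch below builds a minimal policy document and an S3 artifacts setting; the helper names, the bucket name and the artifact name are hypothetical, and a real service role usually needs further permissions (for example for logs and source access) that are omitted here.

```python
import json


def artifact_upload_policy(bucket):
    """Minimal policy letting the build role upload objects to the artifact bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


def s3_artifacts(bucket, name="build-output.zip"):
    """Artifacts setting that sends build output to an S3 bucket as a ZIP file."""
    return {"type": "S3", "location": bucket, "name": name, "packaging": "ZIP"}


if __name__ == "__main__":
    # "my-build-artifacts" is a made-up bucket name.
    print(json.dumps(artifact_upload_policy("my-build-artifacts"), indent=2))
    print(s3_artifacts("my-build-artifacts"))
```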

Incorrect options:

Configure AWS CodeBuild to store output artifacts on EC2 servers – EC2 servers are not a valid output location, so this option is ruled out.

Give AWS CodeCommit permissions to upload the build output to your Amazon S3 bucket – AWS CodeCommit is the repository that holds source code and has no control over compiling the source code, so this option is incorrect.

Contact AWS Support to allow AWS CodePipeline to manage build outputs – You can set AWS CodePipeline to manage its build output locations instead of AWS CodeBuild. There is no need to contact AWS Support.

Reference:

https://docs.aws.amazon.com/codebuild/latest/userguide/create-project.html#create-project-cli

Question 10 of 10

10. Question

You are getting ready for an event to show off your Alexa skill written in JavaScript. As you are testing your voice activation commands you find that some intents are not invoking as they should and you are struggling to figure out what is happening. You included the following code console.log(JSON.stringify(this.event)) in hopes of getting more details about the request to your Alexa skill.

You would like the logs stored in an Amazon Simple Storage Service (S3) bucket named MyAlexaLog. How do you achieve this?

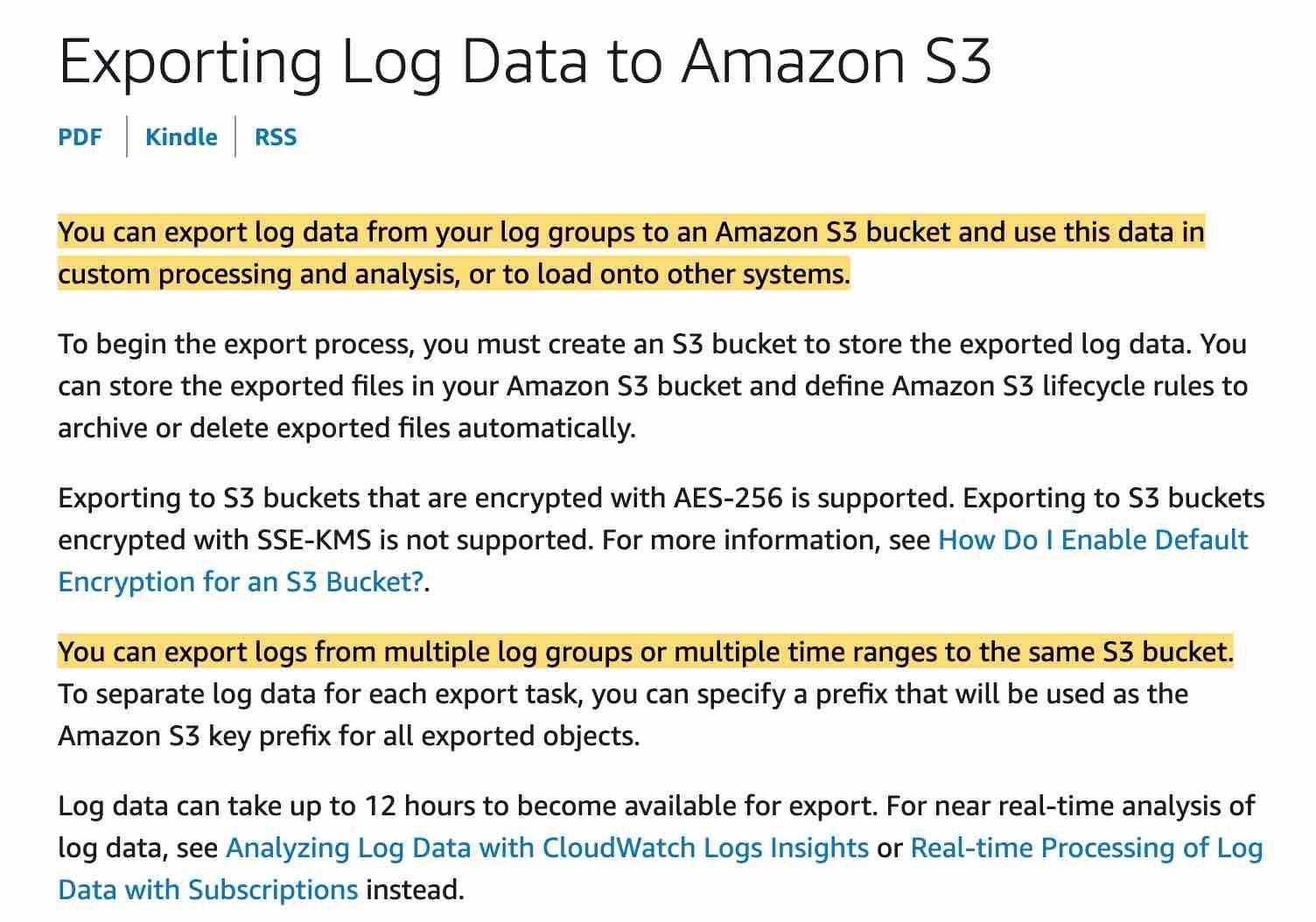

Use CloudWatch integration feature with S3

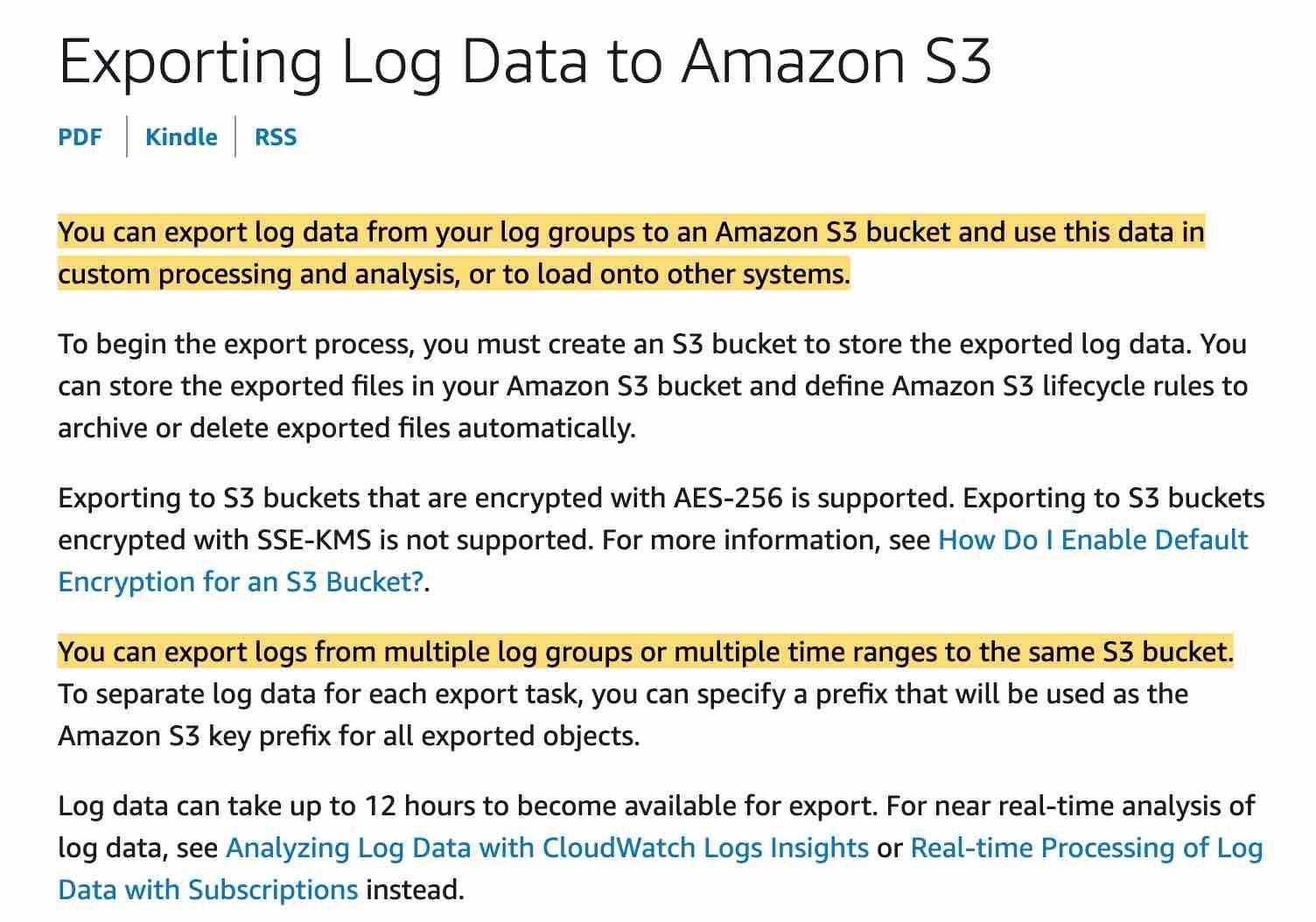

You can export log data from your CloudWatch log groups to an Amazon S3 bucket and use this data in custom processing and analysis, or to load onto other systems.

Exporting CloudWatch Log Data to Amazon S3:

via – https://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/S3Export.html
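An export of this kind can be started with the CloudWatch Logs CreateExportTask API, which takes its time range in milliseconds since the epoch. The following is a sketch under assumptions: the helper names, the log group name, the key prefix and the lowercase bucket name "my-alexa-log" (used because new S3 bucket names must be lowercase) are all illustrative, and start_export needs the third-party boto3 package plus a bucket policy that lets CloudWatch Logs write to the bucket.

```python
from datetime import datetime, timezone


def to_millis(moment):
    """Convert a timezone-aware datetime to milliseconds since the epoch."""
    return int(moment.timestamp() * 1000)


def export_task_params(log_group, bucket, start, end, prefix="alexa-logs"):
    """Arguments for exporting one log group's events in [start, end] to S3."""
    return {
        "logGroupName": log_group,
        "fromTime": to_millis(start),
        "to": to_millis(end),
        "destination": bucket,
        "destinationPrefix": prefix,
    }


def start_export(log_group, bucket, start, end):
    """Start the export task and return its task ID."""
    import boto3  # third-party; imported lazily so the sketch loads without it

    logs = boto3.client("logs")
    return logs.create_export_task(**export_task_params(log_group, bucket, start, end))["taskId"]


if __name__ == "__main__":
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    end = datetime(2024, 1, 2, tzinfo=timezone.utc)
    # Hypothetical log group for a Lambda-hosted skill.
    print(export_task_params("/aws/lambda/my-alexa-skill", "my-alexa-log", start, end))
```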

Incorrect options:

Use CloudWatch integration feature with Kinesis – You can use both to do custom processing or analysis but with S3 you don't have to process anything. Instead, you configure the CloudWatch settings to send logs to S3.

Use CloudWatch integration feature with Lambda – You can use both to do custom processing or analysis but with S3 you don't have to process anything. Instead, you configure the CloudWatch settings to send logs to S3.

Use CloudWatch integration feature with Glue – AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easy for customers to prepare and load their data for analytics. Glue is not the right fit for the given use-case.

Reference:

https://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/S3Export.html


The AWS Certified Developer – Associate examination is intended for individuals who perform a development role and have one or more years of hands-on experience developing and maintaining an AWS-based application.

SkillCertPro Offerings (Instructor Note) :

- We offer 1400 of the latest AWS Certified Developer Associate (DVA-C02) exam questions for practice, which will help you score higher in your exam.

- Aim for 85% or above in our mock exams before taking the main exam.

- Review both your wrong and right answers, and read the explanation provided for each question thoroughly; this will help you understand the question.

- The Master Cheat Sheet was prepared by instructors and contains their personal notes on all exam objectives. It is carefully written to help you understand the topics easily.

- We recommend using the Master Cheat Sheet 2-3 days before the main exam to cram the important notes.

Abilities Validated by the Certification

- Demonstrate an understanding of core AWS services, uses, and basic AWS architecture best practices

- Demonstrate proficiency in developing, deploying, and debugging cloud-based applications using AWS

Recommended Knowledge and Experience for AWS Certified Developer Associate (DVA-C02) Exam Questions

- In-depth knowledge of at least one high-level programming language

- Understanding of core AWS services, uses, and basic AWS architecture best practices

- Proficiency in developing, deploying, and debugging cloud-based applications using AWS

- Ability to use the AWS service APIs, AWS CLI, and SDKs to write applications

- Ability to identify key features of AWS services

- Understanding of the AWS shared responsibility model

- Understanding of application lifecycle management

- Ability to use a CI/CD pipeline to deploy applications on AWS

- Ability to use or interact with AWS services

- Ability to apply a basic understanding of cloud-native applications to write code

- Ability to write code using AWS security best practices (e.g., not using secret and access keys in the code, instead using IAM roles)

- Ability to author, maintain, and debug code modules on AWS

- Proficiency writing code for serverless applications

- Understanding of the use of containers in the development process
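One of the items above, keeping secret and access keys out of code in favor of IAM roles, can be illustrated with a small check. The sketch below is an illustration under stated assumptions, not an official tool: it scans source text for strings shaped like AWS access key IDs (an `AKIA` or `ASIA` prefix followed by 16 uppercase letters or digits). The function name `find_hardcoded_key_ids` and the `make_client` calls inside the sample strings are hypothetical.

```python
import re

# Long-term access key IDs begin with "AKIA" and temporary ones with "ASIA",
# followed by 16 uppercase letters or digits (20 characters in total).
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_hardcoded_key_ids(source):
    """Return (line_number, key_id) pairs for every access key ID in source."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((number, match.group()))
    return hits


# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
risky = 'client = make_client(key_id="AKIAIOSFODNN7EXAMPLE")\n'
# Hypothetical role-based alternative: no credentials appear in the code.
safe = 'client = make_client()  # credentials come from the attached IAM role\n'

print(find_hardcoded_key_ids(risky))
print(find_hardcoded_key_ids(safe))
```

A check like this only catches key IDs, not secret keys, so it complements rather than replaces relying on an IAM role for credentials.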

Alexander Parrales –

I passed my Developer Associate exam, and a big part of this success goes to this course. This practice test is awesome. Its design, content, flow, and structure are very similar to the real AWS exams. It helped me fill the gaps in my knowledge. The review section after the exam gives you detailed insights and shows how your thought process should work when choosing the right answer. I can’t imagine the effort and time spent on this course. A great thanks to Skillcertpro.

Priya Lakshmi T –

I recently passed my AWS Developer Associate exam. I used three practice test packages (Udemy, TutorialDojo and SkillCert Pro) and found that SkillCert’s material was the closest to the actual exam in terms of the areas the questions come from. Very useful and highly recommended.

Abhishek Joshi –

This course is excellent. I passed the exam with the help of these practice exams. Few of these questions did come up in the exam. But definitely helpful. Highly recommended.

Saravanakumar Kolandaivel –

This course is very good. I cleared the exam yesterday. My recommendation is: please don’t expect the same questions as in the course. These 950 questions are not just for memorizing but for understanding the concepts behind them. Just read the full explanation after answering each question. That really helps in the exam. Except for a handful of questions, all the others will make you think twice to select the correct answer.

Ashutosh Jatale –

I want to buy this course, but I just want to check: are the answers reliable? On other sites the questions are there, but the answers are not correct.

SkillCertPro –

We do provide answers and explanations for all the questions we provide. We have certified instructors who will perform quality checks before we bring any course online.

Anchal Taatya –

Though I covered the entire syllabus for the AWS Developer Associate exam via a video course, these practice tests helped me identify the gaps in my learning! The cheat sheet is immensely helpful too. Thank you very much. Passed!

Don Bradcron –

Very good coverage of all the topics and relevant questions in the exams. Although not all questions are exactly the same, the content/material provided at the end helps a lot. I was able to clear my exam a few days back, and the material helped a lot.

Anamika Sh –

The practice tests were perfect prep. I do think the actual cert was more difficult, but the practice tests helped me identify areas where I didn’t have a good understanding and fix them. Passed with 850.

Di Fei Chen –

Hi, I just purchased this practice exam and would like to ask: is the latest version of the test associated with the latest real questions? For example, is test 22 the latest and test 1 the oldest?

Skillcertpro Instructor –

Yes, that’s right. The sets at the end are the latest.

Jimmy Chin –

For sure, I must agree that this is one of the best practice tests that I have had. Please remember that the questions in the main exam will not all be exactly the same as they are here (you can expect similar questions too). But the concepts you learn after taking these practice tests will surely help you in clearing the exam. This is a must-have course if you want to clear the exam on the first attempt.

Nanda Kumar –

Highly recommended. You could take other courses to learn about different AWS services but this is “the one” that will give you the required push to go over the line. Great set of questions with some fabulous cheat sheets alongside.

Jad Collins –

Great resource! Practice tests are realistic and well written overall. The level of detail in the explanations is fantastic, and I would recommend this course if you are planning to take this exam. While the course is awesome, I would also suggest using other resources to help fill in your knowledge gaps. Keep up the good work Skillcertpro!

Paul Carlos –

The first time I took these practice exams, I liked the explanations of the answers for the ones I missed and also the ones I got right, which reinforced the concepts. These exams gave me a good feel for what to expect on the exam. I’m now AWS Certified. Thank you!

R Manikandan –

I got a 988/1000, and the practice test questions definitely helped me. I appreciate the efforts of the team that works behind the scenes to put forward credible questions. Thank you.

Donato Ricardo –

Excellent practice papers. I cleared the AWS Developer Associate yesterday and it was slightly easier than the practice papers (only slightly).

Unfortunately, I had time only to do 2 of the 6 practice papers before the exam. But I did go through the answers and explanation in detail which helped me a lot.

For those taking the exams, I suggest you keep the final one week of your preparations only for these practice tests (take them multiple times if you can – many questions in the exam appear exactly like in the practice papers).

All in all, very well prepared practice papers and worth the money. Many thanks to the author as it helped me clear the certification.

Priyanka Chaudhary –

I recently took my AWS Developer exam. Many questions came from this practice test. More importantly, as certifications become tougher, no course or online material can provide full coverage, but Skillcertpro’s breadth of questions, along with the links to the online materials, helped me read and understand many advanced concepts. I passed my exam with 910 marks.

Shreyas Udupa –

I just completed the actual exam and I am very impressed with Skillcertpro. Almost 40-45 of the 60 questions came from these tests, and I passed the exam with 980/1000 marks after solving your exams. Thanks Skillcertpro!!

Haritha G –

Wow, it’s really a great thing. This gives you a 100% result; I scored 800.

Learning Plan:

1. Complete the AWS free training first; this will give you the concepts to understand.

2. Complete this Skillcertpro test at least 5 times. Read all explanations.

You are good to go!

Doshimayur79 –

The questions in each of the papers are very important, and I found that most of them appeared in the AWS Developer exam.

Also, the explanations in the answers are quite detailed and helpful.

My suggestion is to first learn the concepts and then solve these question papers, and you are all set to score really well.

Keerthi Vanaparthi –

Testing your knowledge against this many questions is very helpful. I’m glad I got this course. It covers a lot of content.

However, there is definitely room for improvement, which is why I knocked off a star. I felt there were a few cases where the questions and explanations could be clearer.

I’d still recommend the course. Passed my exam.

Jasvinder Bawa –

These practice tests were perfect preparation for the exam and are very comprehensive.

I’ll recommend the course and was able to pass the exam.

Thanks a lot for preparing for the exam.

Jeff Lynch –

These practice exams are very comprehensive. All of the questions look like they are coming directly from the AWS team. The best thing about this practice exam is that all the options are explained pretty well. Even wrong answers are explained pretty well. So at the end of the exam, you can review your test and see where you went wrong. Thank you, Skillcertpro, for such a good set of practice exams. I am 100% sure to pass my exam.

James Bates –

Very good

Mangal Parekh –

The questions on these tests were very similar in style to those on the actual exam and the detailed answer key was great! With these and some studying, I was able to pass the developer exam on the first try with a score of 902. Thanks!

Lisa sPisoni –

Thank you for a great set of practice tests! Went through the AWS free videos and did these tests 7 days before the real exam.

Just got my scores back (24 hours after completing the exam). I scored 929/1000. Not sure how many you have to get wrong to get 929. I think the ones I got wrong were because of tricky wording. I wasn’t 100% sure on about 8 of the exam questions.

These are great practice tests that covered everything on my exam.

Amit Borkar –

The course had good information that definitely helped me pass the exam. My only disappointment was that about 5% of the questions had very minimal information in the explanations. The rest all had very good explanations of the answers. Definitely recommended if clearing the exam is your goal.

Divyang Mehta –

The best practice test I have found. I passed my exam on 2nd April 2025 with a score of 818. I learned all the topics and how those services are used, and I practiced all these tests twice; my average score in this series was 75%. About 50% of the questions in the actual test were the same, while the others combined topics; for example, a question might ask about a cache solution together with another topic. Overall, my experience with the SkillCertpro test series was superb, and it helped me a lot.

Meet Sheth –

I cleared the exam on 12th August. This is my first AWS certification, and I want to give thanks to this exam. I went through different practice exams and finally decided to buy this one. 5-6 questions from this test were asked in the actual exam. I took all the exams and made notes from the solutions, and that helped me a lot.

Sayani Mitra –

A very good test series for passing the examination with high grades. I got 943/1000. But before you go through this, it is advised that you go thoroughly through the Certified Developer Associate video course.

Karthik Boopathy –

Each successive practice test ratchets it up a bit over the previous test, providing a great level of challenge and introducing new topics beyond even the course itself, covering items that are confirmed to be necessary for the actual exam! Skillcertpro tests are the gold standard among practice tests. They’re difficult, but they really help!